Infographic Descriptions

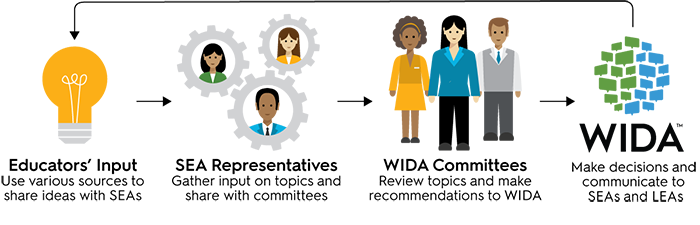

Educator Participation Flow

This graphic represents the process for educators to share their ideas with WIDA. The process begins with educators’ input: an educator comes up with an idea and contacts their SEA representative. Then, the SEA shares the information with the appropriate WIDA committees for further review. Next, the WIDA committees review the idea and make a recommendation to WIDA. Finally, WIDA reviews the recommendation, makes a decision and shares it back with SEAs and educators.

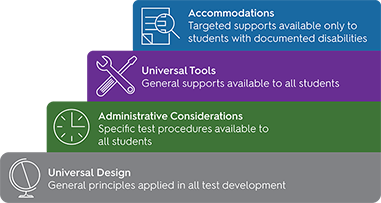

Accessibility and Accommodations Framework

This layered graphic illustrates the three types of accessibility supports available to students taking WIDA assessments, all resting on a foundation of universal design. The wide base of the graphic is universal design, indicating the foundational idea that all students receive test items developed using universal design principles. The next layer up is administrative considerations, indicating flexibility in the timing, scheduling and setting of the test, if necessary. The third layer is universal tools, indicating that tools such as color contrast, highlighter, magnifier and line guides are available to all students taking the test but are generally selected for use by a smaller subset of students. The top layer is accommodations, which are available only to the smallest subset of students: those who have an Individualized Education Program (IEP) or 504 Plan.

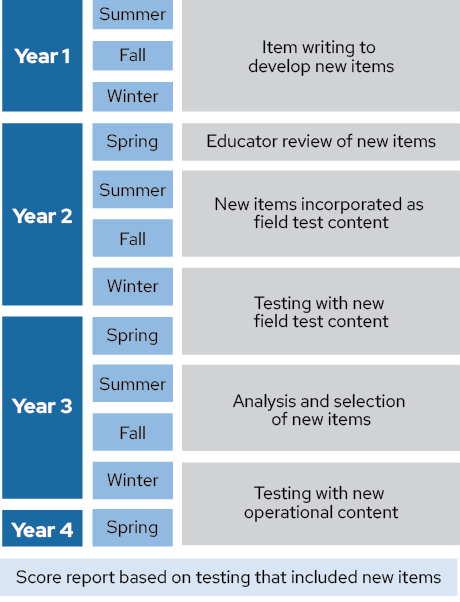

Test Item Development Cycle

This table shows the test development cycle over the course of four years. In year one, from summer through winter, item writing takes place to develop new test items. In the spring of year two, educators review the new items. In the summer and fall of year two, the new items are incorporated as field test content. In the winter of year two and spring of year three, testing takes place with the new field test content. In the summer and fall of year three, the new items are analyzed and selected. In the winter of year three and spring of year four, testing takes place with the new operational content. Finally, score reports are based on testing that included the new items.